Data Validation Node

The Data Validation Node checks incoming data against a JSON Schema before passing it to downstream nodes. This ensures only well-structured, expected data flows through your pipeline, reducing errors and improving reliability.

Configuration

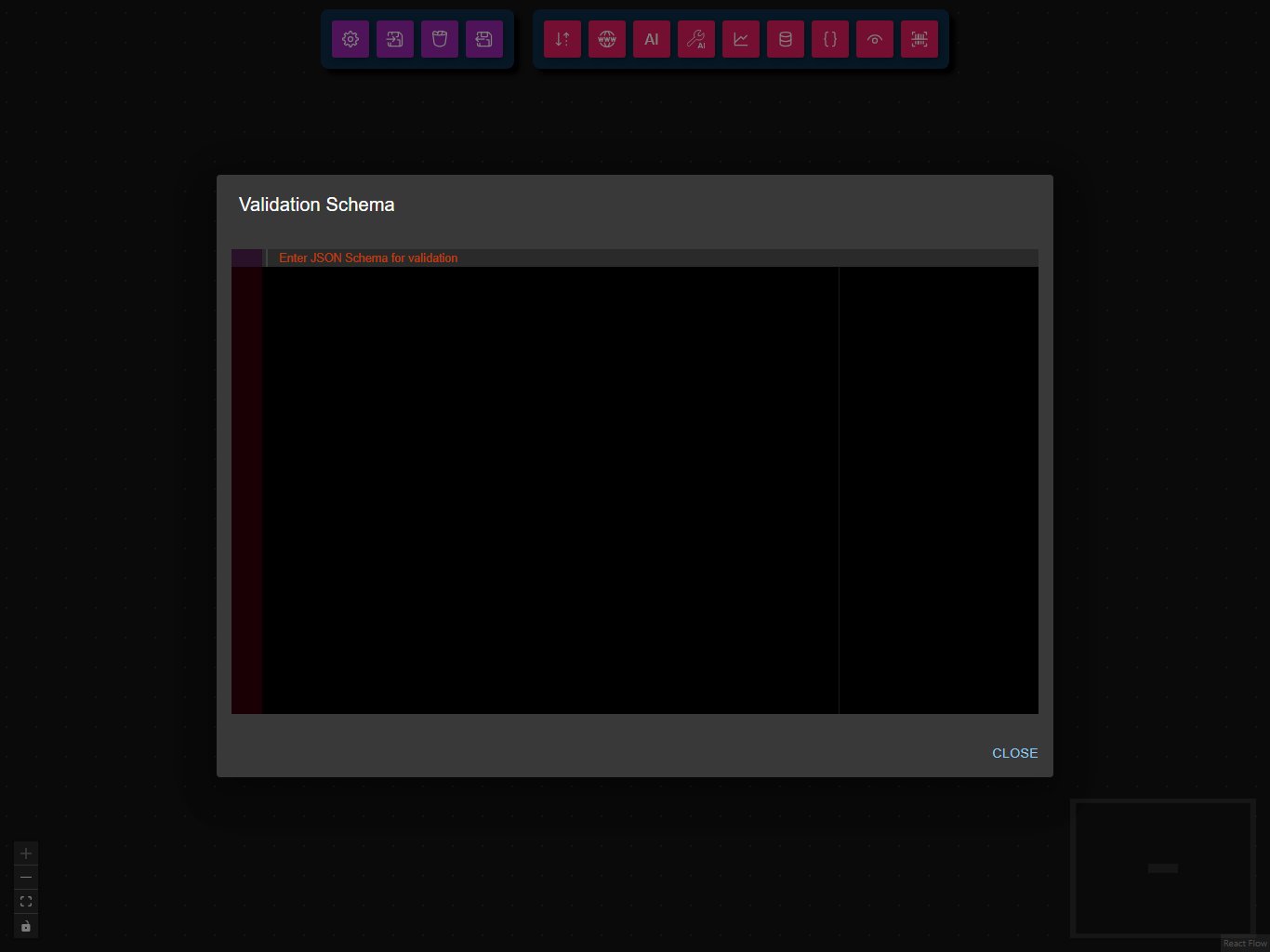

- Schema

Enter a JSON Schema to validate incoming data.

Example

```json
{
  "type": "object",
  "properties": {
    "products": {
      "type": "array",
      "items": {
        "type": "object",
        "required": ["id", "title", "price"],
        "properties": {
          "id": { "type": "integer" },
          "title": { "type": "string" },
          "price": { "type": "number" }
        }
      }
    }
  },
  "required": ["products"]
}
```
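The following is a minimal Python sketch that shows what the example schema accepts and rejects. It implements only the `type`, `properties`, `required`, and `items` keywords that the schema above uses. It is an illustration, not the node's own implementation. The names `validate`, `type_matches`, and `SCHEMA` are hypothetical. A full validator, such as the `jsonschema` package, supports many more keywords.

```python
# Minimal sketch: supports only "type", "properties", "required", and "items".
# Not the node's implementation; a full JSON Schema validator does much more.

SCHEMA = {
    "type": "object",
    "properties": {
        "products": {
            "type": "array",
            "items": {
                "type": "object",
                "required": ["id", "title", "price"],
                "properties": {
                    "id": {"type": "integer"},
                    "title": {"type": "string"},
                    "price": {"type": "number"},
                },
            },
        }
    },
    "required": ["products"],
}


def type_matches(value, expected):
    """Check a Python value against a JSON Schema "type" name."""
    if expected == "object":
        return isinstance(value, dict)
    if expected == "array":
        return isinstance(value, list)
    if expected == "string":
        return isinstance(value, str)
    if expected == "boolean":
        return isinstance(value, bool)
    if expected == "null":
        return value is None
    # bool is a subclass of int in Python, but JSON true/false are not numbers.
    if isinstance(value, bool):
        return False
    if expected == "integer":
        # Newer drafts also accept numbers with a zero fractional part, such as 1.0.
        return isinstance(value, int) or (isinstance(value, float) and value.is_integer())
    if expected == "number":
        return isinstance(value, (int, float))
    raise ValueError(f"unsupported type: {expected}")


def validate(value, schema, path="$"):
    """Return a list of error strings; an empty list means the value is valid."""
    expected = schema.get("type")
    if expected is not None and not type_matches(value, expected):
        return [f"{path}: expected {expected}, got {type(value).__name__}"]
    errors = []
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}: missing required property '{key}'")
        for key, subschema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], subschema, f"{path}.{key}"))
    if isinstance(value, list) and "items" in schema:
        for i, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors


good = {"products": [{"id": 1, "title": "Mug", "price": 9.5}]}
bad = {"products": [{"id": "1", "title": "Mug"}]}  # id is a string, price is missing
print(validate(good, SCHEMA))
print(validate(bad, SCHEMA))
```

Each error message carries a path such as `$.products[0].id`. This makes it easy to see which record and field failed.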

Example Usage

For example usage, see the Product Data Validation and Visualization workflow, which demonstrates fetching API data, validating it, and visualizing the results.

Common Use Cases

- API Response Validation: Ensure third-party API responses match expected formats.

- Input Sanitization: Prevent malformed or incomplete data from entering your workflow.

- Data Quality Assurance: Enforce required fields and data types.

- Error Prevention: Catch issues early and provide feedback for correction.

Best Practices

- Test your schema: Check it against both valid and invalid sample payloads, using a tool such as JSON Schema Validator, before adding it to a workflow.

- Be specific: Define required fields and types to catch subtle errors.

- Handle errors: Use downstream nodes to handle validation failures gracefully.

- Keep schemas up to date: Update schemas as your data structures evolve.

Troubleshooting

Common Issues

- Invalid schema: Ensure your schema is valid JSON and follows the JSON Schema standard.

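- Unexpected validation errors: Compare the failing payload field by field against the schema. Common causes are numbers sent as strings (for example, `"id": "42"` where the schema expects an integer) and fields that are `null` or absent.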

- Empty or missing fields: Use the `required` keyword to enforce the presence of important fields.
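You can often diagnose an invalid schema before pasting it into the node. The following is a minimal sketch that uses Python's standard `json` module. It confirms that the text parses and that the top level is an object, and it reports where parsing stopped if it fails. It does not check that the keywords follow the JSON Schema standard. The helper name `check_schema_text` is hypothetical. The object-at-top-level rule is an assumption about what the node's Schema field expects.

```python
import json


def check_schema_text(text):
    """Return None if text parses as a JSON object, otherwise an error message.

    Only syntax is checked here; JSON Schema keywords are not validated.
    """
    try:
        schema = json.loads(text)
    except json.JSONDecodeError as exc:
        # lineno/colno point at where parsing failed, which is usually at or just
        # after a trailing comma, a missing quote, or an unbalanced brace.
        return f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"
    if not isinstance(schema, dict):
        # Assumption: the node's Schema field expects an object at the top level.
        return f"schema must be a JSON object, got {type(schema).__name__}"
    return None


print(check_schema_text('{"type": "object"}'))
print(check_schema_text('{"type": "object",}'))  # trailing comma
```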

Performance Tips

- The node is best suited to small and medium-sized data objects.

- For large datasets, validate only the parts of the data that downstream nodes actually use, to improve performance.

- Test with sample data before running full workflows.
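The last two tips can be combined into a quick pre-check. The following is a hypothetical Python sketch that roughly mirrors the example schema's rules for each product. It inspects only the `products` array, ignores unrelated fields, and can optionally stop after the first `limit` items. The names `quick_check`, `item_errors`, and `REQUIRED_FIELDS` are illustrative and not part of the node.

```python
# Hypothetical pre-check that roughly mirrors the example schema's item rules.
# It reads only payload["products"]; other top-level fields are never inspected.
REQUIRED_FIELDS = {"id": int, "title": str, "price": (int, float)}


def item_errors(item, index):
    """Return error strings for a single product record."""
    if not isinstance(item, dict):
        return [f"products[{index}]: expected object"]
    errors = []
    for key, expected in REQUIRED_FIELDS.items():
        if key not in item:
            errors.append(f"products[{index}]: missing '{key}'")
        # Reject bools explicitly, since bool is a subclass of int in Python.
        elif isinstance(item[key], bool) or not isinstance(item[key], expected):
            errors.append(f"products[{index}].{key}: wrong type")
    return errors


def quick_check(payload, limit=None):
    """Check products, or only the first `limit` of them when limit is given."""
    products = payload.get("products") if isinstance(payload, dict) else None
    if not isinstance(products, list):
        return ["products: missing or not an array"]
    subset = products if limit is None else products[:limit]
    errors = []
    for i, item in enumerate(subset):
        errors.extend(item_errors(item, i))
    return errors


payload = {
    "products": [{"id": i, "title": f"Item {i}", "price": 9.99} for i in range(1000)],
    "raw_html": "x" * 100_000,  # large field the workflow never reads, so it is skipped
}
print(quick_check(payload, limit=50))  # fast sample check
print(quick_check(payload))            # full check of the products array only
```

Sampling keeps test runs fast, but it can miss a malformed record further down the array. Treat a sampled check as a quick sanity test, not as a substitute for full validation in the running workflow.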

Supported Data Formats

- Input: Any JSON object or array.

- Output: The original data if validation passes; an error message if validation fails.
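The pass-through behavior described above can be sketched as follows. This is a hypothetical Python model, not the node's actual interface. The `"valid"`/`"invalid"` labels stand in for however the workflow routes success and failure. `has_products` is a stand-in check, and `handle` shows a downstream step branching on the result, as the "Handle errors" best practice suggests.

```python
def run_validation_node(data, check):
    """Return ("valid", data) with data unchanged on success, else ("invalid", message)."""
    errors = check(data)
    if errors:
        return "invalid", "Validation failed: " + "; ".join(errors)
    return "valid", data


def has_products(data):
    # Stand-in check for illustration; a real workflow would apply the JSON Schema.
    if isinstance(data, dict) and isinstance(data.get("products"), list):
        return []
    return ["missing or non-array 'products'"]


def handle(data):
    """Hypothetical downstream step that branches on the validation result."""
    port, payload = run_validation_node(data, has_products)
    if port == "valid":
        return f"visualizing {len(payload['products'])} products"
    # Failure branch: log the message, alert someone, or return it for correction.
    return f"rejected: {payload}"


print(handle({"products": [{"id": 1}, {"id": 2}]}))
print(handle({"items": []}))
```

Returning the original object untouched on success means downstream nodes receive exactly what they would have received without the validator in place.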

Why Use Data Validation?

Validating data where it enters the workflow means malformed input fails in one predictable place, instead of causing confusing errors in downstream nodes. This makes debugging easier, especially when working with third-party APIs.