AI Data Processing Node

The AI Data Processing Node is the main AI component in your workflow. It uses local language models via Ollama to analyze, transform, and generate text-based content for a wide range of tasks.

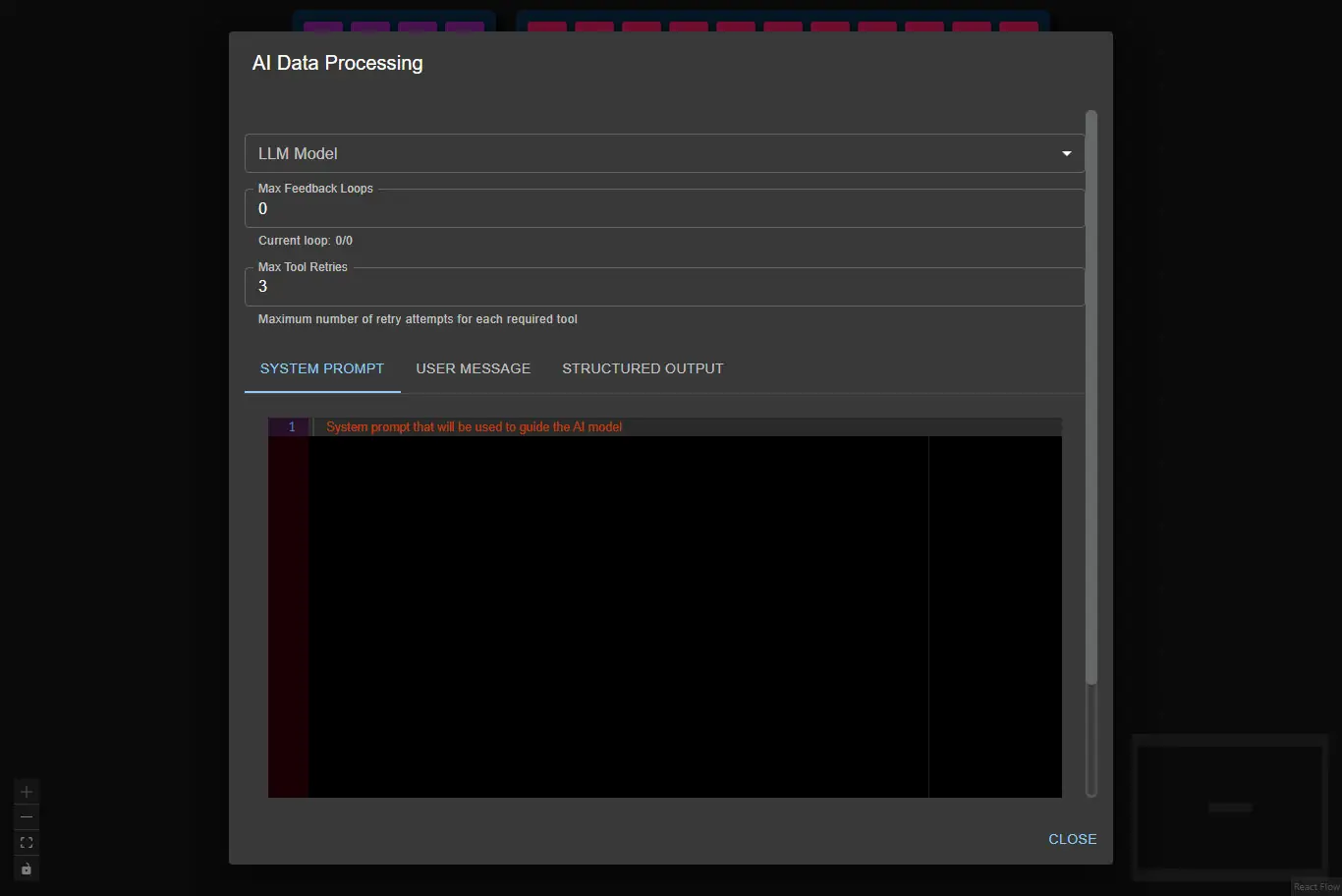

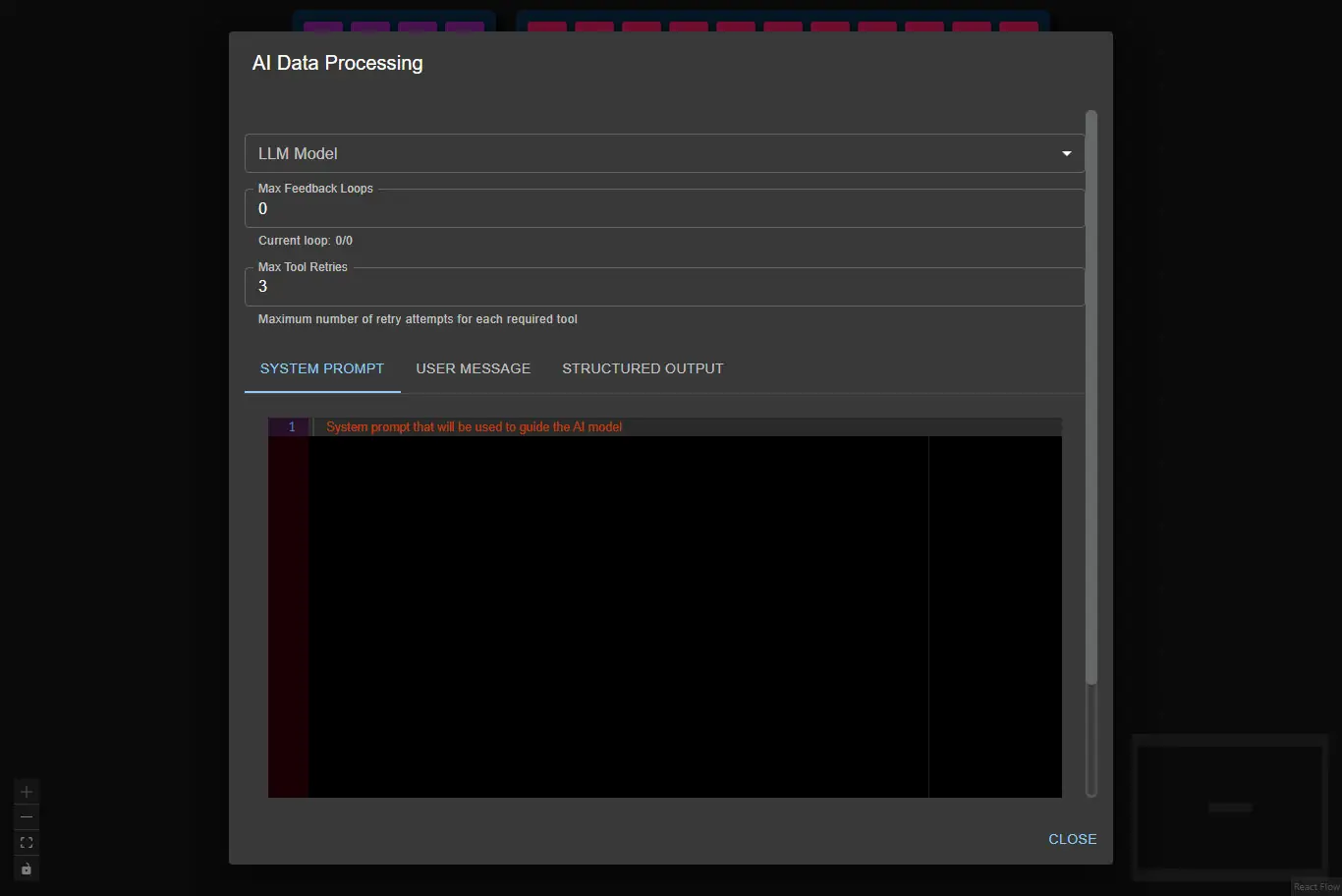

Configuration

- Model

- Max Feedback Loops

- Max Tool Retries

- System Prompt

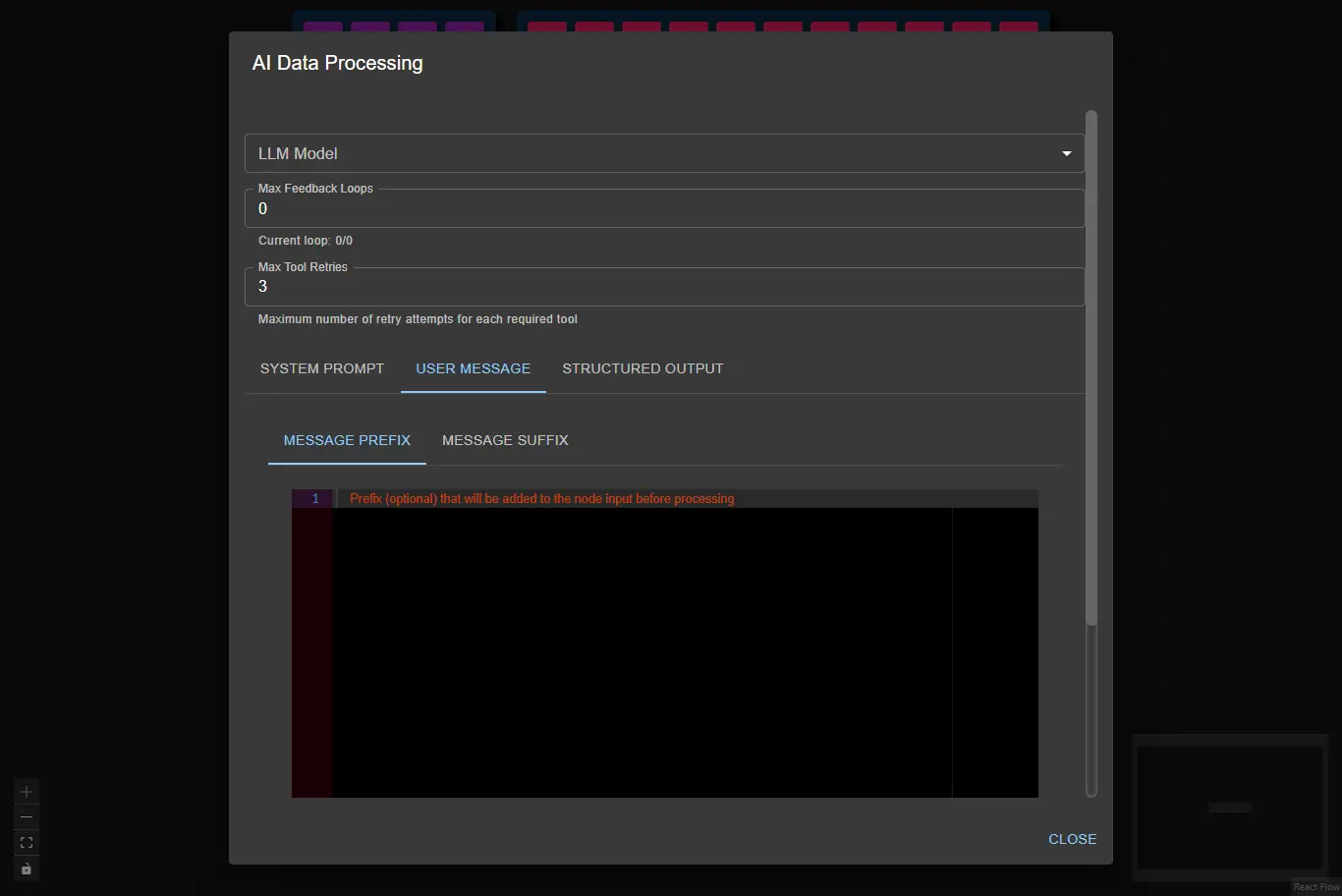

- User Message

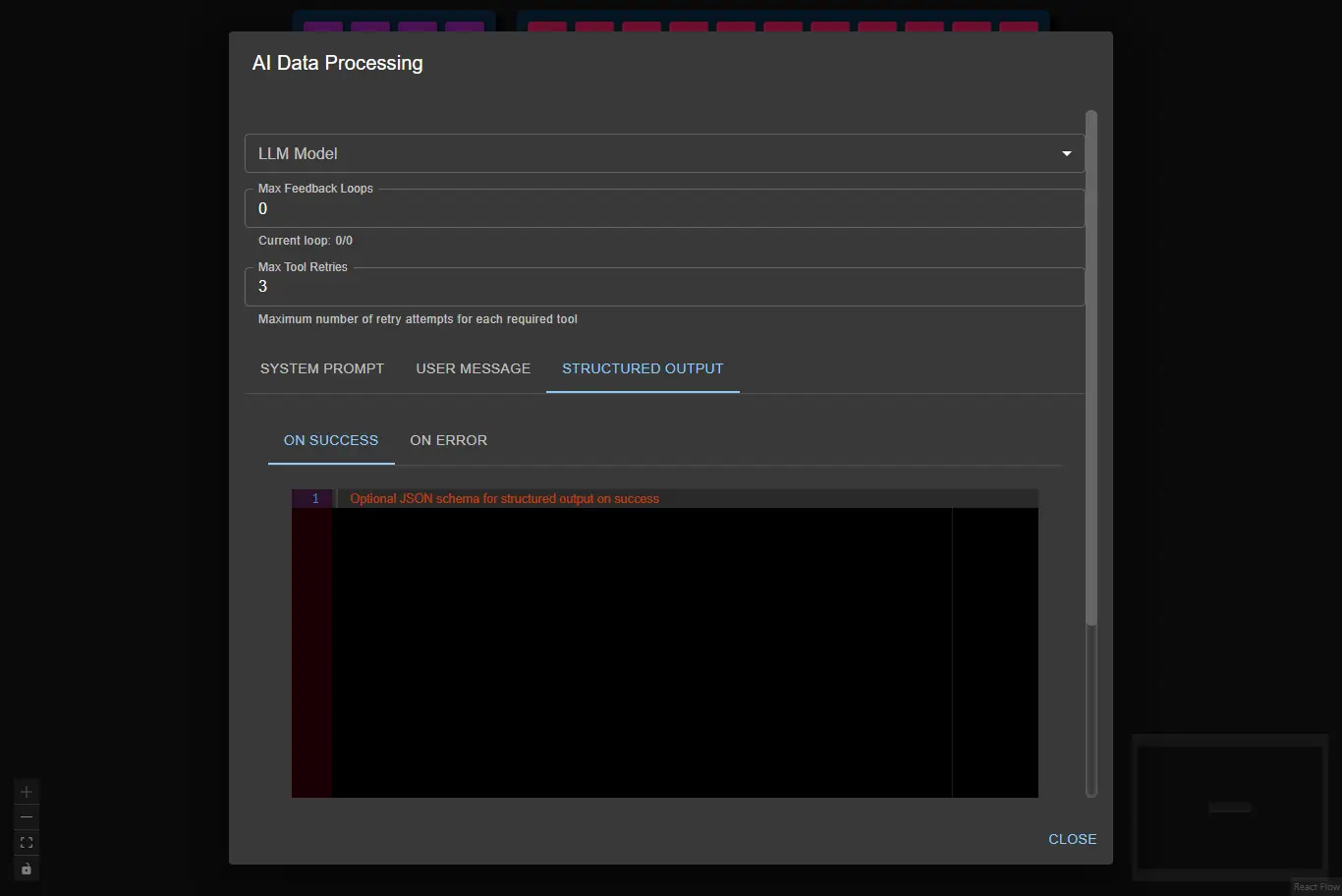

- Structured Output

Choose an Ollama model to use for processing (e.g., llama3.1:8b).

Set how many times the node should retry if it receives an error from the next node in the chain.

Set the maximum number of retry attempts for each required tool call.

If a tool call fails (for example, due to invalid parameters or an external API error), the node will retry up to this number of times before failing.

Provide instructions that guide the AI's behavior and responses.

The input the node receives can be customized before processing by adding text before or after it:

- Message Prefix: Text added before the input.

- Message Suffix: Text added after the input.

Define JSON schemas for structured responses:

- On Success: The expected format for successful responses. If not set, the output will be plain text.

- On Error: The format for error responses. This only works if On Success is also set.
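To show how these settings could map onto a single model call, the sketch below assembles a request body for Ollama's `/api/chat` endpoint. The System Prompt becomes a `system` message, the Message Prefix and Suffix wrap the incoming input, and the On Success schema goes into Ollama's `format` field, which accepts a JSON schema. This is an assumption about how such a node might build its request, not the node's actual implementation. The helper `build_chat_request` and the example schema are hypothetical, and the On Error schema is left out because how the node uses it is not covered here.

```python
import json

# Hypothetical On Success schema: a short summary plus a list of keywords.
SUMMARY_SCHEMA = {
    "type": "object",
    "properties": {
        "summary": {"type": "string"},
        "keywords": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["summary", "keywords"],
}


def build_chat_request(model, system_prompt, node_input,
                       prefix="", suffix="", success_schema=None):
    """Assemble a request body for Ollama's POST /api/chat endpoint.

    Hypothetical helper that mirrors the node's settings, not its real code.
    """
    # Prefix and suffix are concatenated as-is, so include your own spaces or newlines.
    user_content = f"{prefix}{node_input}{suffix}"
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_content},
        ],
        "stream": False,
    }
    # Without an On Success schema, the model replies in plain text.
    if success_schema is not None:
        body["format"] = success_schema
    return body


if __name__ == "__main__":
    request = build_chat_request(
        model="llama3.1:8b",
        system_prompt="You summarize weather reports.",
        node_input="Rain expected after 3 pm, clearing overnight.",
        prefix="Summarize this report:\n",
        success_schema=SUMMARY_SCHEMA,
    )
    print(json.dumps(request, indent=2))
```

To send it yourself, POST the body as JSON to a running Ollama server (by default at http://localhost:11434/api/chat). When `format` is set, the reply's `message.content` is a JSON string that follows the schema.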

Note: If you want to chain multiple AI Data Processing Nodes (for example, one node processes data and another oversees its output), set up both On Success and On Error schemas in the overseer node. If the overseer detects an error, it will trigger a feedback loop, prompting the previous node to correct its output.

See the AI Data Processing Overseer workflow for a complete example.
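The control flow of a worker/overseer pair can be pictured with the sketch below. It is a simplified model under stated assumptions: real model calls are replaced by plain Python functions, the `status`, `result`, and `reason` fields are hypothetical stand-ins for whatever On Success and On Error schemas you configure, and `run_with_feedback` is a hypothetical helper. It also assumes that Max Feedback Loops counts corrections after the first attempt.

```python
from typing import Callable, Optional


def run_with_feedback(
    worker: Callable[[str, Optional[str]], str],
    overseer: Callable[[str], dict],
    node_input: str,
    max_feedback_loops: int,
) -> str:
    """Run a worker/overseer pair, sending overseer errors back to the worker.

    Hypothetical sketch: the overseer returns {"status": "ok", "result": ...}
    for its On Success schema or {"status": "error", "reason": ...} for On Error.
    """
    feedback: Optional[str] = None
    # Assumed semantics: one initial attempt plus up to max_feedback_loops corrections.
    for _ in range(max_feedback_loops + 1):
        output = worker(node_input, feedback)
        verdict = overseer(output)
        if verdict["status"] == "ok":
            return verdict["result"]
        # The overseer's reason becomes feedback for the worker's next attempt.
        feedback = verdict["reason"]
    raise RuntimeError(
        f"Output still rejected after {max_feedback_loops} feedback loops: {feedback}"
    )


# Toy stand-ins for the two AI Data Processing Nodes (no model involved).
def toy_worker(text: str, feedback: Optional[str]) -> str:
    # Returns the raw text first, then upper-cases it once it has received feedback.
    return text.upper() if feedback else text


def toy_overseer(output: str) -> dict:
    if output.isupper():
        return {"status": "ok", "result": output}
    return {"status": "error", "reason": "Output must be upper case."}


if __name__ == "__main__":
    print(run_with_feedback(toy_worker, toy_overseer, "sunny", max_feedback_loops=2))  # prints SUNNY
```

The first attempt is rejected, the overseer's reason is fed back, and the corrected output is accepted on the second pass. With `max_feedback_loops=0`, the same pair fails after the single rejected attempt.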

Example Usage

For example usage, see the Weather Dashboard and AI Data Processing Overseer workflows.

Common Use Cases

- Text Summarization: Condense long documents or articles.

- Data Analysis: Extract insights from structured data.

- Content Generation: Create articles, reports, or responses.

- Question Answering: Process queries and provide answers.

- Language Translation: Convert text between languages.

Best Practices

- Use clear, specific prompts for better results.

- Experiment with different models to find the best fit for your task.

- Use structured output schemas for reliable downstream processing.

- Use feedback loops (an overseer node with both On Success and On Error schemas) to catch and correct faulty output.
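Downstream steps that rely on structured output can still check what they receive before using it. The sketch below is a minimal, standard-library-only check that parses the model's JSON reply and confirms the required top-level keys are present. The function `parse_structured_output` is hypothetical, and it does not check types; a full JSON Schema validator (for example, the third-party `jsonschema` package) would be stricter.

```python
import json


def parse_structured_output(content: str, required: list) -> dict:
    """Parse a JSON reply and check that the required top-level keys exist.

    Hypothetical helper: a minimal check, not full JSON Schema validation.
    """
    # json.JSONDecodeError is a subclass of ValueError, so callers can catch ValueError.
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


if __name__ == "__main__":
    reply = '{"summary": "Rain, then clear skies.", "keywords": ["rain"]}'
    print(parse_structured_output(reply, ["summary", "keywords"]))
```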

Troubleshooting

Common Issues

- Model not found: Make sure the model is installed in Ollama.

- Slow responses: Try a smaller model or check your system resources.

- Inconsistent output: Use structured output schemas for consistency.

- Structured output not supported: Some language models do not reliably follow structured output instructions. In some cases, the Ollama server will respond with an error indicating that the selected model does not support structured output. If this happens, try using a different model or simplify your output schema.

- Date/time understanding issues: Some LLMs, especially smaller models, may have difficulty interpreting or reasoning about dates and times provided in the input. If you notice problems with date handling, try rephrasing your prompt, providing more context, or using a larger, more capable model.
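For the "Model not found" case, you can compare the configured model name against the models your Ollama server reports. Ollama's `GET /api/tags` endpoint returns a JSON object with a `models` list, and `ollama list` shows the same information on the command line. The helpers below are a hypothetical sketch. The name handling is simplified: a model name without a tag is treated as `:latest`, which matches Ollama's default.

```python
import json
import urllib.request


def installed_models(tags_response: dict) -> set:
    """Collect model names from the JSON returned by Ollama's GET /api/tags."""
    return {m["name"] for m in tags_response.get("models", [])}


def is_installed(model: str, tags_response: dict) -> bool:
    # Simplified: a name without a tag refers to the ":latest" tag.
    if ":" not in model:
        model += ":latest"
    return model in installed_models(tags_response)


def fetch_tags(host: str = "http://localhost:11434") -> dict:
    # Requires a running Ollama server; the example below uses sample data instead.
    with urllib.request.urlopen(f"{host}/api/tags") as resp:
        return json.load(resp)


if __name__ == "__main__":
    sample = {"models": [{"name": "llama3.1:8b"}, {"name": "mistral:latest"}]}
    print(is_installed("llama3.1:8b", sample))   # prints True
    print(is_installed("llama3.1:70b", sample))  # prints False
```

With a server running, `is_installed("llama3.1:8b", fetch_tags())` performs the real check. If it returns False, install the model with `ollama pull llama3.1:8b`.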

Performance Tips

- Use smaller models for simple tasks.

- Write concise, focused prompts.

- Batch similar requests when possible.

- For workflows that require tool calls (such as using the Max Tool or CSV parsing), use larger LLMs (8B parameters or more) for lower error rates and more reliable tool execution. Smaller models may fail or produce inconsistent results when handling tool-based tasks.
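When a tool call does fail, the Max Tool Retries setting bounds how often it is attempted again. The sketch below models that bound as a plain retry loop. The helper `call_with_retries`, its optional `revise` callback (a stand-in for the model correcting its tool arguments), and the `FlakyTool` class are all hypothetical. The sketch also assumes the first attempt does not count as a retry.

```python
from typing import Any, Callable, Optional


def call_with_retries(
    tool: Callable[..., Any],
    args: dict,
    max_tool_retries: int,
    revise: Optional[Callable[[dict, Exception], dict]] = None,
) -> Any:
    """Call a tool, retrying failures up to max_tool_retries times.

    Hypothetical sketch: assumes the first attempt does not count as a retry.
    """
    last_error: Optional[Exception] = None
    for _ in range(max_tool_retries + 1):
        try:
            return tool(**args)
        except Exception as exc:  # e.g. invalid parameters or an external API error
            last_error = exc
            if revise is not None:
                # Stand-in for asking the model to correct its tool arguments.
                args = revise(args, exc)
    raise RuntimeError(f"Tool call failed after {max_tool_retries} retries") from last_error


class FlakyTool:
    """Hypothetical tool that fails a fixed number of times, then succeeds."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def __call__(self, city: str) -> str:
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError("external API unavailable")
        return f"forecast for {city}"


if __name__ == "__main__":
    tool = FlakyTool(failures=2)
    print(call_with_retries(tool, {"city": "Oslo"}, max_tool_retries=2))  # prints forecast for Oslo
```

With two failures and a limit of two retries, the third call succeeds. A tool that fails three times exhausts the limit, and the last error is attached as the cause of the `RuntimeError`.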